Summary

Security, including protection from ransomware, should be the first concern for any business that needs to maintain the confidentiality, integrity and availability (the CIA triad) of its infrastructure in a fast-moving world. This article sets out the security principles an organisation should follow when adopting a best-practice approach to consuming AWS public cloud services with AWS Control Tower. Together these principles form a well-architected foundation that safeguards the company's integrity and data assets.

An architecture that is poorly designed from the ground up can be damaging and costly on many fronts, and can leave the entire cloud environment vulnerable to cyberattack. Attention to detail is paramount, because an attacker will use any means to force their way in. Manual security deployment is a big no for many reasons. Instead, a tried-and-tested security deployment should be implemented from the foundation up and scaled out accordingly, so that the relevant Intrusion Detection System (IDS) and Intrusion Prevention System (IPS) mechanisms are in place at every stage.

What is AWS Control Tower?

AWS Control Tower became generally available (GA) on 24 June 2019. It aims to provide an easy way to set up and govern a new, secure, multi-account AWS environment in a few clicks from the AWS Management Console.

For ongoing governance, organisations adopt pre-configured guardrails. These are clearly defined rules for security, operations and compliance, and they prevent the deployment of resources that do not conform to policy.
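
Control Tower's preventive guardrails are implemented as AWS Organizations service control policies (SCPs). The sketch below makes that idea concrete. It builds a hypothetical SCP in the same spirit, which denies everyone except an assumed `SecurityAdminRole` the ability to stop, delete or modify CloudTrail trails. It is not one of Control Tower's own guardrail policies, and the role name is an illustrative assumption.

```python
import json

# Illustrative only: Control Tower ships and manages its own guardrail SCPs.
# This hypothetical policy applies the same idea to CloudTrail protection.
PROTECTED_ACTIONS = [
    "cloudtrail:DeleteTrail",
    "cloudtrail:StopLogging",
    "cloudtrail:UpdateTrail",
]


def deny_cloudtrail_tampering_scp(exempt_role_arn: str) -> dict:
    """Return an SCP denying CloudTrail changes to every principal except one role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyCloudTrailTampering",
                "Effect": "Deny",
                "Action": PROTECTED_ACTIONS,
                "Resource": "*",
                # ArnNotLike with a wildcard account ID exempts the same role name in every account.
                "Condition": {"ArnNotLike": {"aws:PrincipalArn": exempt_role_arn}},
            }
        ],
    }


if __name__ == "__main__":
    scp = deny_cloudtrail_tampering_scp("arn:aws:iam::*:role/SecurityAdminRole")
    print(json.dumps(scp, indent=2))
```

The resulting JSON would be attached to an OU in AWS Organizations. Every account under that OU would then inherit the deny, whatever IAM permissions its users hold.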

Note that Control Tower helps organisations deploy a multi-account AWS environment based on best practices. However, cloud system administrators remain responsible for day-to-day operations, that is, “Security in the Cloud”. At Methods, we therefore developed a one-click security template to address this concern.

Why is Security so important?

Alongside cost, the most important consideration for any business is “Security”. Organisations need to put security at the forefront of any architecture design, before any data is placed on the infrastructure. However, poor design, a lack of technical expertise and legacy infrastructure have caused this good practice to be put on the back burner in many organisations.

Security should be defined from the top down, rather than from the bottom up (reactively, when an incident occurs). Detective, corrective and preventive controls should be a principle adopted by everyone. This article discusses how these controls can be implemented alongside an AWS Control Tower deployment.

Security Implementation for AWS Control Tower

Data loss through negligence is a major worry for every organisation. An AWS Control Tower landing zone comprises three shared accounts: master, log archive and audit. The rest of this article focuses solely on the audit account. Data from the various AWS services across the organisational units (OUs) in AWS Organizations should be streamed to this account. The audit account in turn makes use of the services in the AWS Security, Identity & Compliance portfolio.

To reduce the overhead of managing IAM users and account access and privileges, we recommend implementing AWS Single Sign-On (SSO). Users sign in once, through a single portal, and are authenticated with multi-factor authentication (MFA). From there they are granted the relevant access and privileges to the various accounts in the organisation's OUs. SSO can also be used with third-party products such as Jenkins, GitHub, Slack and Office 365, so that account authentication and authorisation sit under one umbrella.

For any AWS environment, whether part of AWS Control Tower or standalone, AWS Security Hub should be enabled by default. It consumes and analyses security findings from various AWS services and presents them on a single dashboard. Rather than switching between screens, you see all the information in one central place. You can also aggregate findings from multiple accounts into one account, giving you a central security location whose access can be restricted for security and compliance reasons.
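
The sketch below illustrates what the central view gives you. It assumes the findings have already been retrieved in the aggregating account, for example with the Security Hub `GetFindings` API. It then groups them by account and by the AWS Security Finding Format (ASFF) `Severity.Label` field. The sample findings are made up and trimmed to the two fields the code reads.

```python
from collections import Counter

# Severity labels used by the AWS Security Finding Format (ASFF), most severe first.
SEVERITY_ORDER = ["CRITICAL", "HIGH", "MEDIUM", "LOW", "INFORMATIONAL"]


def summarise_findings(findings):
    """Count findings per (account ID, severity label)."""
    counts = Counter()
    for finding in findings:
        label = finding.get("Severity", {}).get("Label", "INFORMATIONAL")
        counts[(finding["AwsAccountId"], label)] += 1
    return counts


def accounts_needing_attention(counts, threshold="HIGH"):
    """Return accounts holding at least one finding at or above `threshold`."""
    urgent = set(SEVERITY_ORDER[: SEVERITY_ORDER.index(threshold) + 1])
    return sorted({account for (account, label) in counts if label in urgent})


if __name__ == "__main__":
    # Made-up sample findings.
    sample = [
        {"AwsAccountId": "111111111111", "Severity": {"Label": "HIGH"}},
        {"AwsAccountId": "111111111111", "Severity": {"Label": "LOW"}},
        {"AwsAccountId": "222222222222", "Severity": {"Label": "MEDIUM"}},
    ]
    counts = summarise_findings(sample)
    print(accounts_needing_attention(counts))  # ['111111111111']
    print(accounts_needing_attention(counts, "MEDIUM"))  # ['111111111111', '222222222222']
```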

Data is one of a company's most important assets, if not the most important, and protecting it from malicious intent, loss and prying eyes is critical. Data stored in Amazon S3 (Simple Storage Service) should therefore not be publicly available. Access should be restricted to privileged individuals using bucket policies, with encryption keys held in AWS KMS (Key Management Service) or AWS CloudHSM. Resource policies and KMS key rotation should both be enabled, working together to safeguard the data stored in the repository.
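
The following minimal sketch shows three of the controls described above, expressed as the documents S3 expects:

* Block Public Access with all four settings enabled.
* Default SSE-KMS encryption.
* A bucket policy that rejects any request not made over TLS.

The bucket name and key ARN are hypothetical placeholders.

```python
import json


def public_access_block() -> dict:
    """All four S3 Block Public Access settings switched on."""
    return {
        "BlockPublicAcls": True,
        "IgnorePublicAcls": True,
        "BlockPublicPolicy": True,
        "RestrictPublicBuckets": True,
    }


def default_kms_encryption(kms_key_arn: str) -> dict:
    """Default SSE-KMS encryption applied to every new object written to the bucket."""
    return {
        "Rules": [
            {
                "ApplyServerSideEncryptionByDefault": {
                    "SSEAlgorithm": "aws:kms",
                    "KMSMasterKeyID": kms_key_arn,
                },
                "BucketKeyEnabled": True,
            }
        ]
    }


def deny_insecure_transport_policy(bucket: str) -> dict:
    """Bucket policy that rejects any request not made over TLS."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical bucket name.
    print(json.dumps(deny_insecure_transport_policy("example-audit-data"), indent=2))
```

With boto3, these dictionaries map onto three S3 client calls:

* `put_public_access_block(Bucket=..., PublicAccessBlockConfiguration=...)`
* `put_bucket_encryption(Bucket=..., ServerSideEncryptionConfiguration=...)`
* `put_bucket_policy(Bucket=..., Policy=json.dumps(...))`

Key rotation is switched on separately, on the KMS key itself, with the KMS client's `enable_key_rotation(KeyId=...)`.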

Data can be lifecycled to Amazon S3 Glacier. Depending on how often it is accessed, data can be transitioned to lower-cost storage and protected with locks (for example S3 Object Lock or S3 Glacier Vault Lock) to safeguard its integrity and retention period. Data should only be accessible to authorised personnel (for example auditors or internal investigators). Access should be monitored for audit purposes using AWS CloudTrail and AWS Config. CloudTrail records who accessed the data and which actions they performed. Config records how resource configurations, such as bucket policies, change over time.
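
The sketch below builds the two configurations this paragraph describes. The first is a lifecycle rule that transitions objects to the S3 Glacier storage class. The second is a default Object Lock retention rule. The 90-day and 365-day values and the rule ID are illustrative assumptions, not recommendations. Object Lock also requires versioning on the bucket.

```python
def archive_lifecycle(days_to_glacier: int = 90, prefix: str = "") -> dict:
    """Lifecycle rule moving objects under `prefix` to S3 Glacier after N days."""
    if days_to_glacier < 0:
        raise ValueError("days_to_glacier must be non-negative")
    return {
        "Rules": [
            {
                "ID": "archive-to-glacier",  # hypothetical rule name
                "Status": "Enabled",
                "Filter": {"Prefix": prefix},
                "Transitions": [{"Days": days_to_glacier, "StorageClass": "GLACIER"}],
            }
        ]
    }


def object_lock_retention(days: int = 365, mode: str = "COMPLIANCE") -> dict:
    """Default Object Lock retention.

    In COMPLIANCE mode, no user (including root) can shorten or remove the retention period.
    """
    if mode not in ("GOVERNANCE", "COMPLIANCE"):
        raise ValueError("mode must be GOVERNANCE or COMPLIANCE")
    if days <= 0:
        raise ValueError("days must be positive")
    return {
        "ObjectLockEnabled": "Enabled",
        "Rule": {"DefaultRetention": {"Mode": mode, "Days": days}},
    }


if __name__ == "__main__":
    print(archive_lifecycle(30, "AWSLogs/"))
    print(object_lock_retention())
```

In boto3 these would be passed as `LifecycleConfiguration=` to `put_bucket_lifecycle_configuration` and as `ObjectLockConfiguration=` to `put_object_lock_configuration`.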

As an organisation's infrastructure grows, it becomes increasingly challenging to monitor and secure the servers being deployed, whether AWS resources or applications. To reduce this overhead, we encourage the use of Amazon CloudWatch, a real-time monitoring and management service. Metric filters and alarms can be created from logs, and threshold breaches can be notified to the relevant parties via Amazon SNS (Simple Notification Service). In addition, CloudWatch Events can act as a detection system, delivering a near real-time stream of events as changes take place in the AWS environment. Rules that match these events can then trigger notifications or other actions, acting as a prevention system. Any resource or configuration created outside the security criteria set by the organisation can therefore be disabled or terminated automatically. This ensures that all users work within the permitted boundary.
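
The sketch below shows the detect-then-act flow. It has three parts:

1. A CloudWatch Events event pattern that fires when a security group ingress rule is added.
2. A deliberately simplified local matcher for that pattern.
3. A hypothetical check that a remediation function could run to spot rules open to `0.0.0.0/0`.

The shape of `requestParameters` is assumed from CloudTrail's record of `AuthorizeSecurityGroupIngress` calls. Real event patterns support far more operators than this matcher does.

```python
# Event pattern for a CloudWatch Events rule that fires whenever a security
# group ingress rule is added, as recorded by CloudTrail.
SG_INGRESS_PATTERN = {
    "source": ["aws.ec2"],
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {
        "eventSource": ["ec2.amazonaws.com"],
        "eventName": ["AuthorizeSecurityGroupIngress"],
    },
}


def matches(pattern: dict, event: dict) -> bool:
    """Simplified matcher: nested keys must exist and scalar values must be listed.

    Real event patterns also support prefix, numeric, exists and anything-but
    filters, and array-valued event fields; none of that is modelled here.
    """
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


def opens_to_world(event: dict) -> bool:
    """Hypothetical remediation check: does the request allow 0.0.0.0/0? (IPv6 not checked.)"""
    params = event.get("detail", {}).get("requestParameters") or {}
    for permission in params.get("ipPermissions", {}).get("items", []):
        for ip_range in permission.get("ipRanges", {}).get("items", []):
            if ip_range.get("cidrIp") == "0.0.0.0/0":
                return True
    return False
```

In a deployment, a rule with this pattern could target an SNS topic for notification. It could also target a Lambda function that calls the EC2 `RevokeSecurityGroupIngress` API whenever a check like `opens_to_world` returns true.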

Audit logs from Amazon CloudWatch, AWS CloudTrail and AWS Config should be streamed to the audit account. There, the information is isolated, access is restricted to privileged individuals, and file integrity is monitored and maintained. The files should be stored in Amazon S3 with access restricted by the relevant controls (for example, bucket policies and key encryption), and lifecycled to an archive repository.
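
CloudTrail has its own log file integrity validation. It records SHA-256 hashes of delivered log files in signed digest files, which can be checked with `aws cloudtrail validate-logs`.

The sketch below shows only the core idea: comparing stored files against hashes recorded at delivery time. An in-memory dictionary stands in for S3, and the manifest format is hypothetical. It does not read real CloudTrail digest files or verify their signatures.

```python
import hashlib
from typing import Dict, List


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def verify_log_files(files: Dict[str, bytes], manifest: Dict[str, str]) -> Dict[str, List[str]]:
    """Compare stored log files with the SHA-256 hashes recorded at delivery time."""
    report: Dict[str, List[str]] = {"ok": [], "modified": [], "missing": []}
    for key in sorted(manifest):
        if key not in files:
            report["missing"].append(key)
        elif sha256_hex(files[key]) != manifest[key]:
            report["modified"].append(key)
        else:
            report["ok"].append(key)
    # Files present in storage but never recorded in the manifest are suspicious too.
    report["unexpected"] = sorted(set(files) - set(manifest))
    return report


if __name__ == "__main__":
    # Hypothetical object keys and contents.
    delivered = {
        "logs/a.json": b'{"eventName": "GetObject"}',
        "logs/b.json": b'{"eventName": "PutObject"}',
    }
    manifest = {key: sha256_hex(body) for key, body in delivered.items()}
    stored = {**delivered, "logs/b.json": b'{"eventName": "DeleteObject"}'}  # tampered copy
    print(verify_log_files(stored, manifest))
```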

If data is accessed or updated from outside AWS Control Tower, we recommend using AWS Web Application Firewall (WAF) to safeguard against infiltration by malicious traffic. Such traffic, including DDoS attacks, can also be absorbed by Amazon CloudFront (CDN), Elastic Load Balancing (ELB), Amazon Route 53 and AWS Shield, with Amazon GuardDuty providing threat detection.
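
The following sketch makes the WAF idea concrete. It shows a hypothetical AWS WAF (v2) rate-based rule, as the dictionary you would include in a web ACL's rules, alongside a toy per-IP counter. The counter illustrates what "rate-based" means. By default, WAF counts each IP's requests over a trailing five-minute window and blocks the IP once it exceeds the limit. The limit of 2000 and the names are assumptions, and WAF's real evaluation schedule differs from this per-request simulation.

```python
from collections import defaultdict, deque

# Hypothetical AWS WAF (v2) rate-based rule: block any single IP address that
# exceeds LIMIT requests within the evaluation window.
LIMIT = 2000
RATE_RULE = {
    "Name": "rate-limit-per-ip",
    "Priority": 0,
    "Statement": {"RateBasedStatement": {"Limit": LIMIT, "AggregateKeyType": "IP"}},
    "Action": {"Block": {}},
    "VisibilityConfig": {
        "SampledRequestsEnabled": True,
        "CloudWatchMetricsEnabled": True,
        "MetricName": "rateLimitPerIp",
    },
}


class TrailingWindowCounter:
    """Toy per-IP request counter over a trailing window (300 s by default)."""

    def __init__(self, limit: int, window_seconds: float = 300.0):
        self.limit = limit
        self.window = window_seconds
        self.hits = defaultdict(deque)

    def allow(self, ip: str, timestamp: float) -> bool:
        recent = self.hits[ip]
        # Forget requests that have fallen out of the trailing window.
        while recent and recent[0] <= timestamp - self.window:
            recent.popleft()
        recent.append(timestamp)
        return len(recent) <= self.limit


if __name__ == "__main__":
    counter = TrailingWindowCounter(limit=3)
    print([counter.allow("203.0.113.7", t) for t in (0, 1, 2, 3)])
```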

The sensitivity of data stored in S3 also matters, particularly where it includes personally identifiable information (PII). Amazon Macie can be used to check what is being stored at rest. At the time of writing, Macie is available only in the US East (N. Virginia) and US West (Oregon) Regions. Additionally, Amazon Athena, an interactive query service, can be used to analyse data stored in S3. Athena can in turn be integrated with Amazon QuickSight, a business intelligence tool, for easy data visualisation.
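
The sketch below gives a flavour of the classification Macie performs. It uses two deliberately simple, hypothetical regular expressions, one for email addresses and a simplified one for UK National Insurance numbers. This is not how Macie works internally. Macie's managed data identifiers are far more thorough, and these patterns will produce both false positives and false negatives.

```python
import re

# Hypothetical, deliberately simple PII patterns (not Macie's identifiers).
PII_PATTERNS = {
    "email": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    # Simplified UK National Insurance number: two letters, six digits, A-D suffix.
    "uk_nino": re.compile(r"\b[A-CEGHJ-PR-TW-Z]{2}\d{6}[A-D]\b"),
}


def scan_for_pii(text: str) -> dict:
    """Return {identifier name: [matches]} for each pattern found in `text`."""
    hits = {}
    for name, pattern in PII_PATTERNS.items():
        found = pattern.findall(text)
        if found:
            hits[name] = found
    return hits


if __name__ == "__main__":
    print(scan_for_pii("Contact jane.doe@example.com about NI number AB123456C."))
```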

Users accessing data should always be tightly monitored, whether they access it internally or remotely via VPN, AWS Direct Connect or the internet. We recommend adopting the principle of least privilege. Each user belongs to a permission set configured in AWS SSO. This is combined with roles (RBAC), IAM or resource policies, KMS or CloudHSM keys, and the mandatory guardrails (preventive security controls).
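
One way to keep permission-set policies close to least privilege is to lint them before they are attached. The hypothetical checker below flags `Allow` statements that grant every action of a service (such as `s3:*`) or apply to all resources (`"*"`). It is a sketch, not a substitute for IAM Access Analyzer or a full policy review. For example, it ignores `NotAction`, `NotResource` and partial wildcards such as `s3:Get*`.

```python
def _as_list(value):
    """IAM allows Action/Resource to be a single string or a list."""
    return [value] if isinstance(value, str) else list(value)


def wildcard_findings(policy: dict) -> list:
    """Flag Allow statements that grant whole-service actions or all resources.

    NotAction/NotResource and partial wildcards such as "s3:Get*" are not analysed.
    """
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be given as an object
        statements = [statements]
    findings = []
    for index, statement in enumerate(statements):
        if statement.get("Effect") != "Allow":
            continue
        for action in _as_list(statement.get("Action", [])):
            if action == "*" or action.endswith(":*"):
                findings.append(f"Statement {index}: broad action '{action}'")
        if "*" in _as_list(statement.get("Resource", [])):
            findings.append(f"Statement {index}: applies to all resources")
    return findings


if __name__ == "__main__":
    # Hypothetical permission-set policy.
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": "s3:*", "Resource": "*"},
            {"Effect": "Allow", "Action": ["s3:GetObject"], "Resource": "arn:aws:s3:::example-audit-data/*"},
        ],
    }
    for finding in wildcard_findings(policy):
        print(finding)
```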

Amazon GuardDuty and AWS Shield can be used to detect and respond to threats. GuardDuty detects malicious activity and unauthorised access, while Shield protects against DDoS attacks. Protection can be strengthened further with Amazon Detective, which helps identify the root cause of potential security issues or suspicious activity from AWS logs. Amazon Inspector adds due diligence on EC2 hosts by running automated security assessments that evaluate applications for exposure, vulnerabilities and deviations from best practice.
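
GuardDuty attaches a numeric severity to each finding. The sketch below triages findings with that number, assuming GuardDuty's documented bands: low (1.0 to 3.9), medium (4.0 to 6.9) and high (7.0 upwards). It returns the IDs of findings at or above a chosen band, which could then be escalated, for example via SNS. The sample IDs and severity values are made up.

```python
BANDS = ["LOW", "MEDIUM", "HIGH"]


def severity_label(score: float) -> str:
    """Map a GuardDuty numeric severity to a band (assumed: <4.0 low, <7.0 medium, else high)."""
    if score < 4.0:
        return "LOW"
    if score < 7.0:
        return "MEDIUM"
    return "HIGH"


def findings_to_escalate(findings, minimum: str = "MEDIUM") -> list:
    """Return the IDs of findings whose severity band is at or above `minimum`."""
    floor = BANDS.index(minimum)
    return [f["Id"] for f in findings if BANDS.index(severity_label(f["Severity"])) >= floor]


if __name__ == "__main__":
    # Made-up findings; only Id and Severity are read.
    sample = [
        {"Id": "f1", "Type": "Recon:EC2/PortProbeUnprotectedPort", "Severity": 2.0},
        {"Id": "f2", "Type": "UnauthorizedAccess:EC2/SSHBruteForce", "Severity": 5.0},
        {"Id": "f3", "Severity": 8.0},
    ]
    print(findings_to_escalate(sample))
```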

We also recommend AWS Trusted Advisor. It scans the AWS environment, compares the results against AWS best practices (aligned with the AWS Well-Architected Framework) and provides relevant recommendations. Any security lapses in the current implementation can then be identified and rectified immediately.

Conclusion

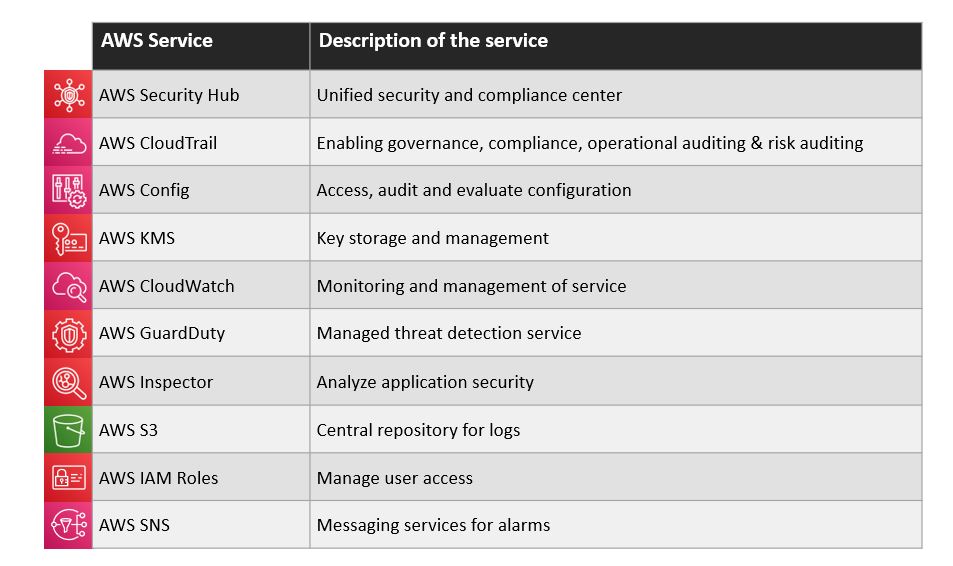

The AWS services and best practices above should be adopted by all. Whatever your architectural setup or business portfolio, these recommendations will help safeguard your organisation. As part of Methods' proof of concept for AWS Control Tower, we developed a one-click security implementation in AWS CloudFormation. It deploys and enables the AWS services listed in Table 1.

The services in Table 1 should form part of the foundation of every AWS account deployed in the organisation. This safeguards the company as a whole. Most importantly, further layers of security can then be developed and deployed as infrastructure as code (IaC) to suit the business, for example an e-commerce website.

Table 1 – Security Services enabled
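
The one-click template itself is not reproduced in this article. The Python below is a rough, hypothetical sketch of the approach. It generates a minimal CloudFormation template that enables Security Hub and GuardDuty, creates an SNS topic for alerts, and creates a KMS key with automatic rotation. It is not Methods' template. The resource names are made up, and it assumes none of these services is already enabled in the target account.

```python
import json


def security_baseline_template() -> dict:
    """Minimal CloudFormation template enabling a few of the services discussed above."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Hypothetical security baseline (illustrative sketch)",
        "Resources": {
            "SecurityHub": {"Type": "AWS::SecurityHub::Hub"},
            "GuardDutyDetector": {
                "Type": "AWS::GuardDuty::Detector",
                "Properties": {"Enable": True, "FindingPublishingFrequency": "FIFTEEN_MINUTES"},
            },
            "SecurityAlertsTopic": {"Type": "AWS::SNS::Topic"},
            "DataKey": {
                "Type": "AWS::KMS::Key",
                "Properties": {
                    "EnableKeyRotation": True,
                    # Key policy modelled on the default: the account root administers the key.
                    "KeyPolicy": {
                        "Version": "2012-10-17",
                        "Statement": [
                            {
                                "Sid": "AllowAccountAdministration",
                                "Effect": "Allow",
                                "Principal": {"AWS": {"Fn::Sub": "arn:aws:iam::${AWS::AccountId}:root"}},
                                "Action": "kms:*",
                                "Resource": "*",
                            }
                        ],
                    },
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(security_baseline_template(), indent=2))
```

If the output were saved as `security-baseline.json`, it could be deployed to a single account with `aws cloudformation deploy --template-file security-baseline.json --stack-name security-baseline`. Alternatively, it could be rolled out across accounts with CloudFormation StackSets.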

For more information, please speak to Cloud Security Engineer Junaid Mohammad (Junaid.Mohammad@methods.co.uk).